About Me

Hi there, this is Jun Wang. Welcome to my website. I'm an AI researcher. I love programming, but I prefer training models to write code for me.

I am a Staff Research Scientist at ByteDance Seed working on foundational model training. Prior to that, I was a Senior Applied Scientist at Amazon, specializing in foundational model pre-training, post-training, and reinforcement learning for complex reasoning tasks. I built the very first generation of LLMs at Amazon (AWS Titan). My expertise spans the full stack of LLM development, including data curation, pre-training, SFT, and RLHF. I have experience training both text and code models. At AWS, I focused on deep RL for math and code generation tasks.

At Seed, I’ve contributed to the open-source RL project VeRL, and most recently I’ve been working on full-stack video generation training.

Opinions are my own, and I do not speak for my employer or my team. On this webpage, I'd like to share what I'm studying and my notes on the papers I read. Feel free to reach out if you have any comments or suggestions.

In my spare time, I like hiking a lot. I'm also very much into reading history books, biographies, and the like. Drop me a line if you want to chat more!

All my publications can be found on Google Scholar.

Professional Services

- Reviewer for ACL ARR 2024, EMNLP 2025, ICLR 2025

- Reviewer for NeurIPS, ICLR 2023

- Reviewer for AMLC 2020, 2021

- Reviewer for ICLR 2022

- Reviewer for AAAI 2021

I served as an Amazon Research Award reviewer and was an AC for AMLC at Amazon.

Miscellaneous

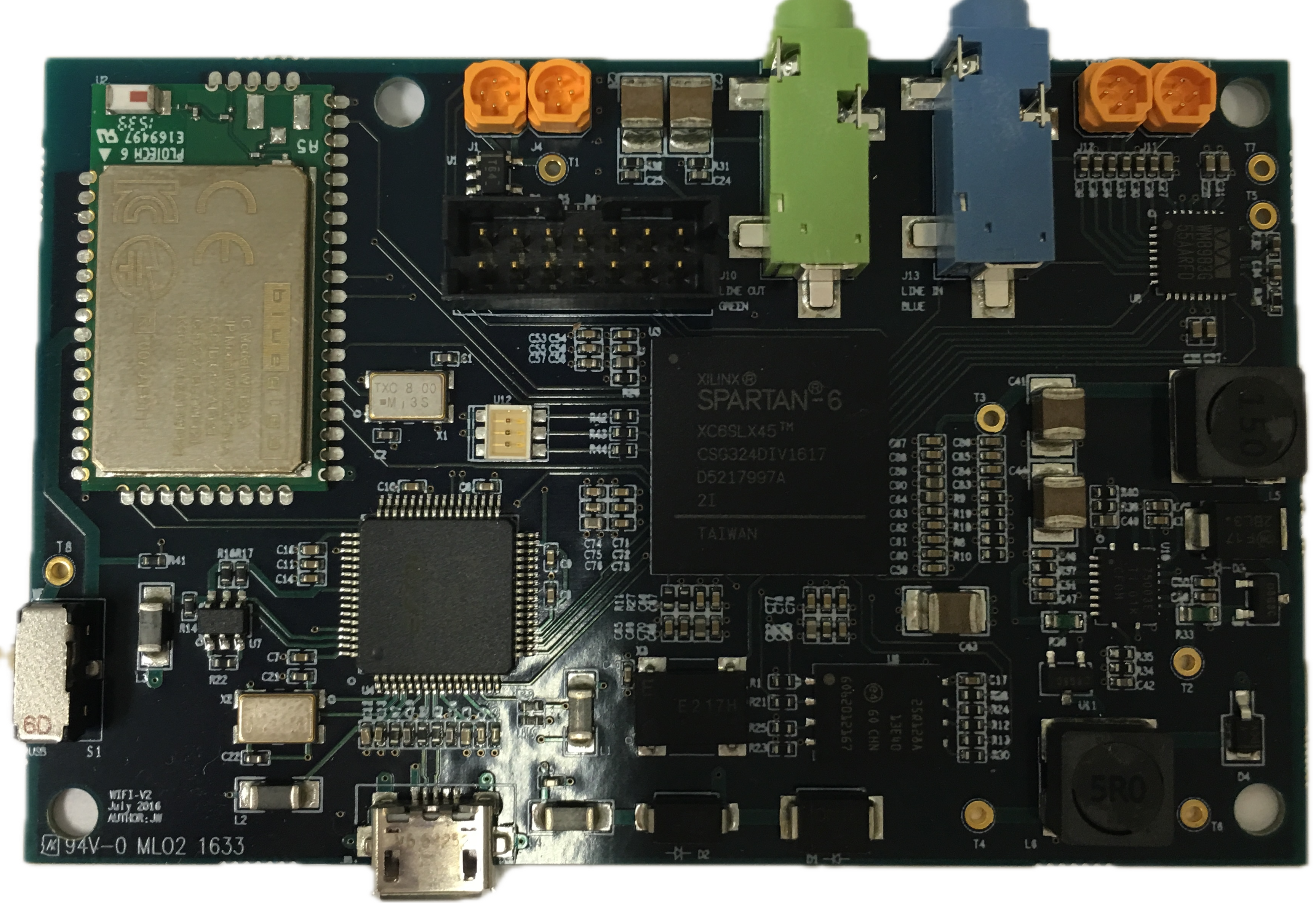

During my studies, I trained as an electrical engineer with a focus on integrated circuit design, including analog, RF, and power-management circuits. I was also proficient in Verilog HDL for signal processing. The project shown below is a PCB I designed while I was at UT Dallas; it features a Xilinx FPGA and a Wi-Fi module for real-time speech signal processing.

FPGA Speech Processing Platform for Cochlear Implant

...